Mirror of https://git.mirrors.martin98.com/https://github.com/infiniflow/ragflow.git (synced 2025-08-10 19:28:58 +08:00)

Miscellaneous UI updates (#6947)

### What problem does this PR solve?

### Type of change

- [x] Documentation Update

parent 4b125f0ffe
commit 6051abb4a3

@ -119,7 +119,7 @@ No, this feature is not supported.

|

||||

Yes, we support enhancing user queries based on existing context of an ongoing conversation:

|

||||

|

||||

1. On the **Chat** page, hover over the desired assistant and select **Edit**.

|

||||

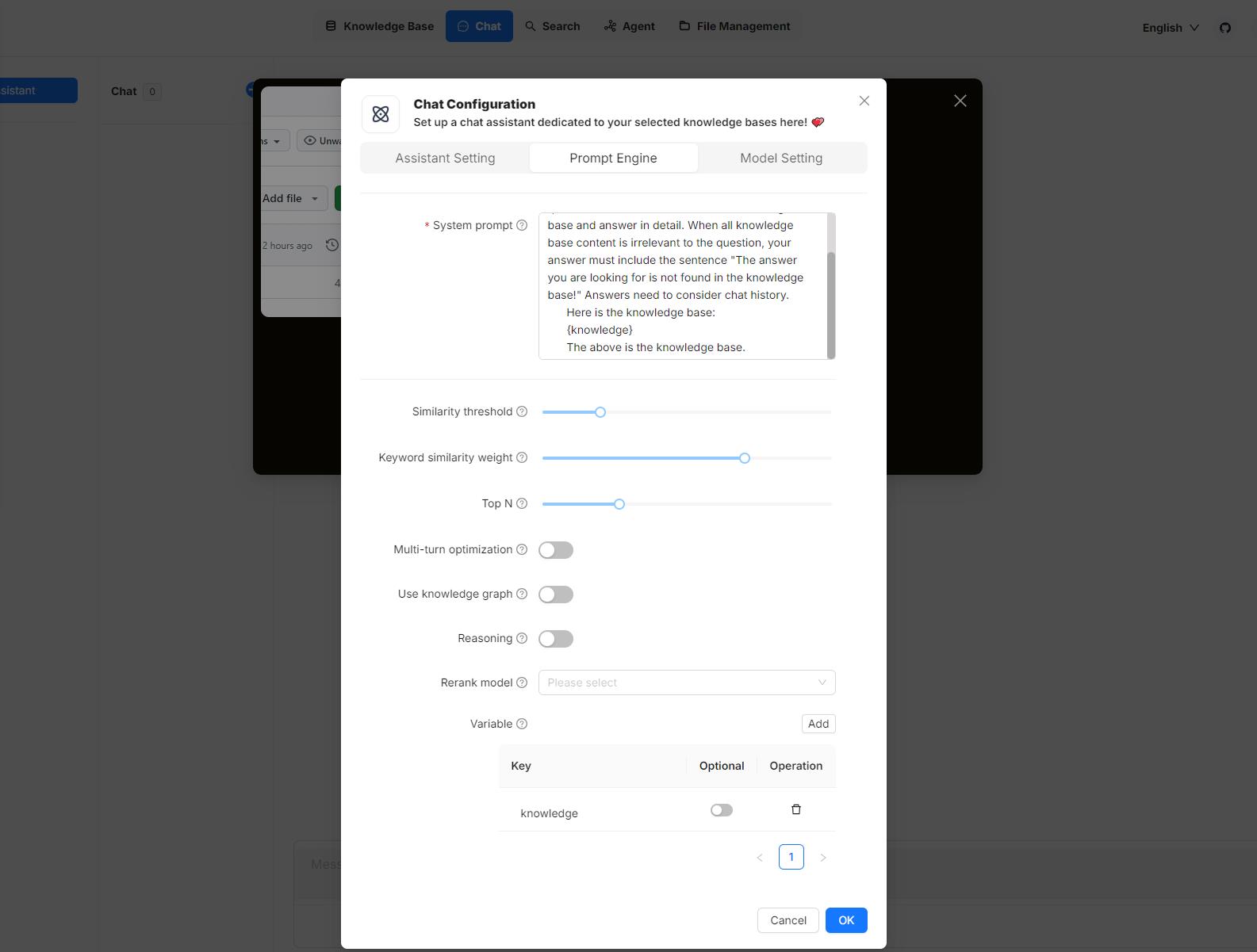

2. In the **Chat Configuration** popup, click the **Prompt Engine** tab.

|

||||

2. In the **Chat Configuration** popup, click the **Prompt engine** tab.

|

||||

3. Switch on **Multi-turn optimization** to enable this feature.

|

||||

|

||||

---

|

||||

@@ -419,7 +419,7 @@ It is not recommended to manually change the 32-file batch upload limit. However
This error occurs because there are too many chunks matching your search criteria. Try reducing the **TopN** and increasing **Similarity threshold** to fix this issue:

1. Click **Chat** in the middle top of the page.
2. Right-click the desired conversation > **Edit** > **Prompt Engine**
2. Right-click the desired conversation > **Edit** > **Prompt engine**
3. Reduce the **TopN** and/or raise **Similarity threshold**.
4. Click **OK** to confirm your changes.
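The effect of the two settings in the steps above can be pictured with a small TypeScript sketch. It is illustrative only: `ScoredChunk` and `selectChunks` are hypothetical names, and the order of filtering and truncation is an assumption rather than RAGFlow's actual retrieval code. It shows why a higher **Similarity threshold** and a lower **TopN** both reduce the number of chunks passed on.

```typescript
// Hypothetical shape of a retrieved chunk; not RAGFlow's actual data model.
interface ScoredChunk {
  id: string;
  similarity: number; // assumed to lie in [0, 1]
}

// Keep chunks at or above the threshold, then keep the topN best of those.
function selectChunks(
  chunks: ScoredChunk[],
  similarityThreshold: number,
  topN: number,
): ScoredChunk[] {
  return chunks
    .filter((c) => c.similarity >= similarityThreshold)
    .sort((a, b) => b.similarity - a.similarity)
    .slice(0, topN);
}

// Made-up scores, for illustration only.
const candidates: ScoredChunk[] = [
  { id: "a", similarity: 0.91 },
  { id: "b", similarity: 0.35 },
  { id: "c", similarity: 0.22 },
  { id: "d", similarity: 0.08 },
];

const loose = selectChunks(candidates, 0.2, 8); // low bar, generous TopN
const strict = selectChunks(candidates, 0.3, 1); // higher bar, smaller TopN
console.log(loose.length, strict.length);
```

With these sample scores, the looser settings keep three chunks and the stricter settings keep one.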


@@ -12,8 +12,8 @@ A checklist to speed up question answering.

Please note that some of your settings may consume a significant amount of time. If you often find that your question answering is time-consuming, here is a checklist to consider:

- In the **Prompt Engine** tab of your **Chat Configuration** dialogue, disabling **Multi-turn optimization** will reduce the time required to get an answer from the LLM.
- In the **Prompt Engine** tab of your **Chat Configuration** dialogue, leaving the **Rerank model** field empty will significantly decrease retrieval time.
- In the **Prompt engine** tab of your **Chat Configuration** dialogue, disabling **Multi-turn optimization** will reduce the time required to get an answer from the LLM.
- In the **Prompt engine** tab of your **Chat Configuration** dialogue, leaving the **Rerank model** field empty will significantly decrease retrieval time.
- When using a rerank model, ensure you have a GPU for acceleration; otherwise, the reranking process will be *prohibitively* slow.

:::tip NOTE

@@ -17,7 +17,7 @@ In RAGFlow, variables are closely linked with the system prompt. When you add a

## Where to set variables

Hover your mouse over your chat assistant, click **Edit** to open its **Chat Configuration** dialogue, then click the **Prompt Engine** tab. Here, you can work on your variables in the **System prompt** field and the **Variable** section:
Hover your mouse over your chat assistant, click **Edit** to open its **Chat Configuration** dialogue, then click the **Prompt engine** tab. Here, you can work on your variables in the **System prompt** field and the **Variable** section:

@@ -33,7 +33,7 @@ In the **Variable** section, you add, remove, or update variables.
It does not currently make a difference whether you set `{knowledge}` to optional or mandatory, but note that this design will be updated at a later point.
:::

From v0.17.0 onward, you can start an AI chat without specifying knowledge bases. In this case, we recommend removing the `{knowledge}` variable to prevent unnecessary references and keeping the **Empty response** field empty to avoid errors.
From v0.17.0 onward, you can start an AI chat without specifying knowledge bases. In this case, we recommend removing the `{knowledge}` variable to prevent unnecessary reference and keeping the **Empty response** field empty to avoid errors.
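One way to check whether a system prompt still references `{knowledge}` or a custom variable is to scan it for brace placeholders. The sketch below is a hypothetical TypeScript helper, not part of RAGFlow: the `{name}` syntax is taken from the `{knowledge}` example, while the function names and the regular expression are assumptions.

```typescript
// Collect the distinct {name} placeholders that appear in a prompt.
function placeholdersIn(prompt: string): string[] {
  const pattern = /\{(\w+)\}/g; // assumed placeholder syntax
  const names: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(prompt)) !== null) {
    if (names.indexOf(match[1]) === -1) {
      names.push(match[1]);
    }
  }
  return names;
}

// Compare placeholders used in the prompt with the declared variables.
function checkVariables(prompt: string, declared: string[]) {
  const used = placeholdersIn(prompt);
  return {
    // Referenced in the prompt but never declared.
    undeclared: used.filter((name) => declared.indexOf(name) === -1),
    // Declared but never referenced in the prompt.
    unused: declared.filter((name) => used.indexOf(name) === -1),
  };
}

// Hypothetical prompt and variable list, for illustration only.
const prompt =
  "Answer in a {style} style.\nHere is the knowledge base:\n{knowledge}";
const report = checkVariables(prompt, ["knowledge", "style", "tone"]);
console.log(report);
```

Here `tone` would be reported as unused, which is the kind of mismatch between the **Variable** section and the system prompt that is worth resolving before saving.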

### Custom variables

@@ -46,13 +46,15 @@ Besides `{knowledge}`, you can also define your own variables to pair with the s

## 2. Update system prompt

After you add or remove variables in the **Variable** section, ensure your changes are reflected in the system prompt to avoid inconsistencies and errors. Here's an example:
After you add or remove variables in the **Variable** section, ensure your changes are reflected in the system prompt to avoid inconsistencies or errors. Here's an example:

```

You are an intelligent assistant. Please answer the question by summarizing chunks from the specified knowledge base(s)...

Your answers should follow a professional and {style} style.

...

Here is the knowledge base:
{knowledge}
The above is the knowledge base.

@@ -19,7 +19,7 @@ You start an AI conversation by creating an assistant.

> RAGFlow offers you the flexibility of choosing a different chat model for each dialogue, while allowing you to set the default models in **System Model Settings**.

2. Update **Assistant Settings**:
2. Update **Assistant settings**:

- **Assistant name** is the name of your chat assistant. Each assistant corresponds to a dialogue with a unique combination of knowledge bases, prompts, hybrid search configurations, and large model settings.
- **Empty response**:

@@ -28,7 +28,7 @@ You start an AI conversation by creating an assistant.
- **Show quote**: This is a key feature of RAGFlow and enabled by default. RAGFlow does not work like a black box. Instead, it clearly shows the sources of information that its responses are based on.
- Select the corresponding knowledge bases. You can select one or multiple knowledge bases, but ensure that they use the same embedding model, otherwise an error would occur.

3. Update **Prompt Engine**:
3. Update **Prompt engine**:

- In **System**, you fill in the prompts for your LLM, you can also leave the default prompt as-is for the beginning.
- **Similarity threshold** sets the similarity "bar" for each chunk of text. The default is 0.2. Text chunks with lower similarity scores are filtered out of the final response.

@@ -3,11 +3,11 @@ sidebar_position: 2
slug: /deploy_local_llm
---

# Deploy LLM locally
# Deploy local models
import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

Run models locally using Ollama, Xinference, or other frameworks.
Deploy and run local models using Ollama, Xinference, or other frameworks.

---

@@ -5,7 +5,7 @@ slug: /join_or_leave_team

# Join or leave a team

Accept a team invite to join a team, decline a team invite, or leave a team.
Accept an invite to join a team, decline an invite, or leave a team.

---

@@ -23,7 +23,7 @@ You cannot invite users to a team unless you are its owner.
## Prerequisites

1. Ensure that your Email address that received the team invitation is associated with a RAGFlow user account.
2. To view and update the team owner's shared knowledge base, the team owner must set a knowledge base's **Permissions** to **Team**.
2. The team owner should share his knowledge bases by setting their **Permission** to **Team**.

## Accept or decline team invite

@@ -74,7 +74,7 @@ Released on March 3, 2025.

### New features

- AI chat: Implements Deep Research for agentic reasoning. To activate this, enable the **Reasoning** toggle under the **Prompt Engine** tab of your chat assistant dialogue.
- AI chat: Implements Deep Research for agentic reasoning. To activate this, enable the **Reasoning** toggle under the **Prompt engine** tab of your chat assistant dialogue.
- AI chat: Leverages Tavily-based web search to enhance contexts in agentic reasoning. To activate this, enter the correct Tavily API key under the **Assistant settings** tab of your chat assistant dialogue.
- AI chat: Supports starting a chat without specifying knowledge bases.
- AI chat: HTML files can also be previewed and referenced, in addition to PDF files.

@@ -554,7 +554,7 @@ export default {
selectLanguage: 'Sprache auswählen',
reasoning: 'Schlussfolgerung',
reasoningTip:
'Ob Antworten durch Denkprozesse wie DeepSeek-R1/OpenAI o1 generiert werden sollen. Wenn aktiviert, integriert das Chat-Modell Deep Research autonom während der Beantwortung von Fragen, wenn es auf ein unbekanntes Thema trifft. Dies beinhaltet, dass das Chat-Modell dynamisch externe Kenntnisse durchsucht und endgültige Antworten durch Denkprozesse generiert.',
'Ob beim Frage-Antwort-Prozess ein logisches Arbeitsverfahren aktiviert werden soll, wie es bei Modellen wie Deepseek-R1 oder OpenAI o1 der Fall ist. Wenn aktiviert, ermöglicht diese Funktion dem Modell, auf externes Wissen zuzugreifen und komplexe Fragen schrittweise mithilfe von Techniken wie der „Chain-of-Thought“-Argumentation zu lösen. Durch die Zerlegung von Problemen in überschaubare Schritte verbessert dieser Ansatz die Fähigkeit des Modells, präzise Antworten zu liefern, was die Leistung bei Aufgaben, die logisches Denken und mehrschrittige Überlegungen erfordern, steigert.',
tavilyApiKeyTip:
'Wenn hier ein API-Schlüssel korrekt eingestellt ist, werden Tavily-basierte Websuchen verwendet, um den Abruf aus der Wissensdatenbank zu ergänzen.',
tavilyApiKeyMessage: 'Bitte geben Sie Ihren Tavily-API-Schlüssel ein',

@@ -405,7 +405,7 @@ This auto-tagging feature enhances retrieval by adding another layer of domain-s
newConversation: 'New conversation',
createAssistant: 'Create an Assistant',
assistantSetting: 'Assistant settings',
promptEngine: 'Prompt Engine',
promptEngine: 'Prompt engine',
modelSetting: 'Model Setting',
chat: 'Chat',
newChat: 'New chat',

@@ -533,7 +533,7 @@ This auto-tagging feature enhances retrieval by adding another layer of domain-s
selectLanguage: 'Select a language',
reasoning: 'Reasoning',
reasoningTip:
'It will trigger reasoning process like Deepseek-R1/OpenAI o1. Integrates an agentic search process into the reasoning workflow, allowing models itself to dynamically retrieve external knowledge whenever they encounter uncertain information.',
`Whether to enable a reasoning workflow during question answering, as seen in models like Deepseek-R1 or OpenAI o1. When enabled, this allows the model to access external knowledge and tackle complex questions in a step-by-step manner, leveraging techniques like chain-of-thought reasoning. This approach enhances the model's ability to provide accurate responses by breaking down problems into manageable steps, improving performance on tasks that require logical reasoning and multi-step thinking.`,
tavilyApiKeyTip:
'If an API key is correctly set here, Tavily-based web searches will be used to supplement knowledge base retrieval.',
tavilyApiKeyMessage: 'Please enter your Tavily API Key',

@@ -551,7 +551,7 @@ This auto-tagging feature enhances retrieval by adding another layer of domain-s
passwordDescription:
'Please enter your current password to change your password.',
model: 'Model providers',
modelDescription: 'Set the model parameter and API KEY here.',
modelDescription: 'Configure model parameters and API KEY here.',
team: 'Team',
system: 'System',
logout: 'Log out',

@@ -517,7 +517,7 @@ export default {
keywordTip: `應用LLM分析使用者的問題,提取在相關性計算中需要強調的關鍵字。`,
reasoning: '推理',
reasoningTip:
'是否像 DeepSeek-R1 / OpenAI o1 一樣通過推理產生答案。啟用後,允許模型在遇到未知情況時將代理搜索過程整合到推理工作流中,自行動態檢索外部知識,並通過推理生成最終答案。',
'在問答過程中是否啟用推理工作流程,例如Deepseek-R1或OpenAI o1等模型所採用的方式。啟用後,該功能允許模型存取外部知識,並借助思維鏈推理等技術逐步解決複雜問題。通過將問題分解為可處理的步驟,這種方法增強了模型提供準確回答的能力,從而在需要邏輯推理和多步思考的任務上表現更優。',
tavilyApiKeyTip:
'如果 API 金鑰設定正確,它將利用 Tavily 進行網路搜尋作為知識庫的補充。',
tavilyApiKeyMessage: '請輸入你的 Tavily API Key',

@@ -534,7 +534,7 @@ General:实体和关系提取提示来自 GitHub - microsoft/graphrag:基于
keywordTip: `应用 LLM 分析用户的问题,提取在相关性计算中要强调的关键词。`,
reasoning: '推理',
reasoningTip:
'是否像 Deepseek-R1 / OpenAI o1一样通过推理产生答案。启用后,允许模型在遇到未知情况时将代理搜索过程集成到推理工作流中,自行动态检索外部知识,并通过推理生成最终答案。',
'在问答过程中是否启用推理工作流,例如Deepseek-R1或OpenAI o1等模型所采用的方式。启用后,该功能允许模型访问外部知识,并借助思维链推理等技术逐步解决复杂问题。通过将问题分解为可处理的步骤,这种方法增强了模型提供准确回答的能力,从而在需要逻辑推理和多步思考的任务上表现更优。',
tavilyApiKeyTip:
'如果 API 密钥设置正确,它将利用 Tavily 进行网络搜索作为知识库的补充。',
tavilyApiKeyMessage: '请输入你的 Tavily API Key',

@@ -23,7 +23,7 @@ export function ChatPromptEngine() {

return (
<section>
<Subhead>Prompt Engine</Subhead>
<Subhead>Prompt engine</Subhead>
<div className="space-y-8">
<FormField
control={form.control}
